GitHub Actions + DigitalOcean + Elixir = ❤️

Today I ran an experiment with GitHub's new (well, not so new) CI, GitHub Actions, by using it on my blog (yes, the one you are reading).

The project is available here.

So, I ran this test with:

- A simple Droplet on DigitalOcean ($5)

- Elixir + Phoenix

- Docker + Docker Hub

- GitHub Actions

For this experiment, I decided to use Docker to simplify development and deployment, and because it makes scaling on K8s easy.

Of course, this blog will never need a container orchestrator, but it is good practice.

Before diving into the experiment, a few things are worth making clear:

- It was a test done in about 3 hours.

- There is downtime: the time it takes to kill the container and bring the new one up (e.g. docker kill blog_prod).

- There is some prior nginx configuration, which I'll include at the end of the article.

- Docker Hub isn't strictly necessary, but I like the portability it gives me.

First of all

Let's create a Dockerfile to perform the build in the pipeline.

The project has one Dockerfile for development and another for production (prod.dockerfile); in this article I'll show prod.dockerfile.

In summary, its relevant instructions are:

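    ENV PORT=4000 \
        MIX_ENV=prod \
        SECRET_KEY_BASE=${SECRET_KEY_BASE} \
        DATABASE_URL=${DATABASE_URL}

    CMD ["mix", "phx.server"]

Here we declare the sensitive environment variables (SECRET_KEY_BASE, DATABASE_URL). Since the workflow's docker build command passes no build arguments, their real values are supplied at runtime, through docker run -e in the deploy job.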
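If those variables are not available during the build, ENV sets them to empty strings in the image, so a container started without -e SECRET_KEY_BASE=... would boot with blank values. One way to fail fast is a small guard that runs before the server starts. This is a minimal POSIX sh sketch, not part of the author's project: require_env is a hypothetical helper, and the entrypoint wiring in the last comment is an assumption (the Dockerfile above starts the server directly with CMD).

```shell
#!/bin/sh
# Hypothetical start-up guard: return non-zero and name every required
# variable that is unset or empty.
require_env() {
  missing=0
  for name in "$@"; do
    # Indirect lookup in POSIX sh; "name" must be a plain variable name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing required environment variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Possible use in a custom entrypoint script (assumption):
#   require_env SECRET_KEY_BASE DATABASE_URL && exec mix phx.server
```

Each missing variable is reported on stderr before the function returns, so a single failed start shows everything that needs to be set.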

Go to Action

In short, here's what we need: when something is pushed to master, we build a new image and push it to our repository on Docker Hub. After that, we connect to the droplet over SSH and start the container on the port nginx expects (4000).

Important points to consider:

- We need a secure way to pass the database credentials and Phoenix's secret key (SECRET_KEY_BASE).

- To push to the repository, we must first log in to Docker Hub.

So let's create our workflow file at .github/workflows/actions.yml and add the following:

    on:
      push:
        branches:
          - master

This specifies that the workflow runs whenever a push is made to the master branch.

Next, we create our first job:

    ...
    jobs:
      build:
        name: Build, push
        runs-on: ubuntu-latest
        steps:
          - name: Checkout master
            uses: actions/checkout@master

We defined a job called build, named "Build, push", that runs on an Ubuntu runner (ubuntu-latest).

Then we added our first step, actions/checkout@master, which checks out the repository's code at the commit that triggered the workflow (here, a push to master).

Let’s continue with our steps…

    steps:
      ...
      - name: Build container image
        run: docker build -t rafaelgss/projects:blog-latest -f prod.dockerfile .
      - name: Docker Login
        env:
          DOCKER_USERNAME: ${{ secrets.DOCKER_USERNAME }}
          DOCKER_PASSWORD: ${{ secrets.DOCKER_PASSWORD }}
        run: docker login -u $DOCKER_USERNAME -p $DOCKER_PASSWORD
      - name: Push image to Docker Hub
        run: docker push rafaelgss/projects:blog-latest

We've now added a sequence of three steps:

- Build the production image (prod.dockerfile) with the blog-latest tag.

- Log in to Docker Hub.

- Push the image to Docker Hub.

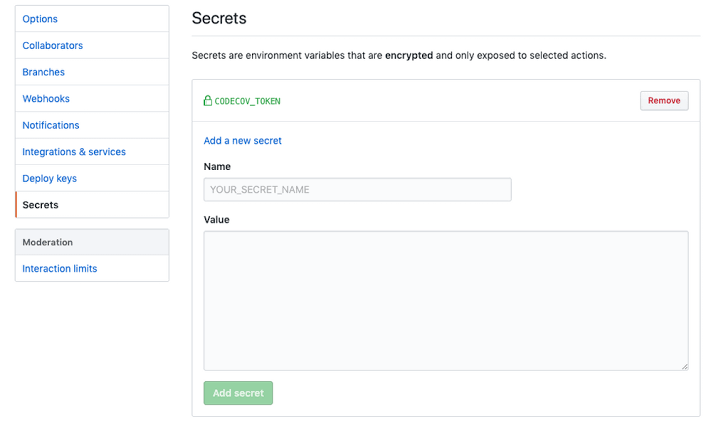

Note that in step 2 we use ${{ secrets.* }}. These are secrets defined in the repository's settings, which is where we store all sensitive information safely.
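For comparison, the same build, login and push sequence can be written as a plain shell function. This is a sketch that assumes DOCKER_USERNAME and DOCKER_PASSWORD are exported as in the Docker Login step; publish_image is a hypothetical name. The password is piped through docker login --password-stdin rather than passed with -p, so it doesn't appear in the process arguments, and the push names the blog-latest tag explicitly, since recent Docker versions push only :latest when no tag is given.

```shell
#!/bin/sh
# Sketch: build, log in and push, reading credentials from the
# DOCKER_USERNAME / DOCKER_PASSWORD environment variables.
IMAGE="rafaelgss/projects:blog-latest"

publish_image() {
  # --password-stdin keeps the password out of the process arguments, and
  # the push names the tag explicitly instead of relying on defaults.
  docker build -t "$IMAGE" -f prod.dockerfile . &&
    printf '%s' "$DOCKER_PASSWORD" |
    docker login -u "$DOCKER_USERNAME" --password-stdin &&
    docker push "$IMAGE"
}
```

In a workflow step, the login line alone would become run: printf '%s' "$DOCKER_PASSWORD" | docker login -u "$DOCKER_USERNAME" --password-stdin.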

With that, we can build the image and push it to Docker Hub. Now let's move on to the main part: the deployment.

First, create a new job called Deploy and make it run after the build job (needs: build).

    deploy:
      needs: build
      name: Deploy
      runs-on: ubuntu-latest

Then we add its steps:

    steps:
      - name: executing remote ssh commands using key
        uses: appleboy/ssh-action@master
        env:
          VIRTUAL_HOST: 'blog.rafaelgss.com.br'
          SECRET_KEY_BASE: ${{ secrets.SECRET_KEY_BASE }}
          DATABASE_URL: ${{ secrets.DATABASE_URL }}
        with:
          host: ${{ secrets.HOST }}
          username: ${{ secrets.USERNAME }}
          key: ${{ secrets.key }}
          port: ${{ secrets.PORT }}
          envs: VIRTUAL_HOST,SECRET_KEY_BASE,DATABASE_URL
          script: |
            docker pull rafaelgss/projects:blog-latest
            docker kill blog_prod
            docker rm blog_prod
            docker run -d -p 4000:4000 --name blog_prod -e VIRTUAL_HOST="$VIRTUAL_HOST" -e SECRET_KEY_BASE="$SECRET_KEY_BASE" -e DATABASE_URL="$DATABASE_URL" -t rafaelgss/projects:blog-latest

In this single step, we open an SSH connection to the droplet and run the commands in script:

- We pull the image pushed by the previous job.

- We kill the running container (if any). This is what causes the downtime.

- We remove the previous container.

- We start a new container from the new image, passing the required environment variables (stored in Secrets).
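A possible variant of that script, sketched as a POSIX sh function (deploy is a hypothetical name; IMAGE and CONTAINER mirror the names used in the workflow). It replaces the separate kill and rm with docker rm -f, which stops and removes the container in one call, and it tolerates the very first deploy, when there is no blog_prod container yet and docker kill would fail. It does not remove the downtime: the old container is still gone before the new one starts.

```shell
#!/bin/sh
# Sketch of the remote deploy script. VIRTUAL_HOST, SECRET_KEY_BASE and
# DATABASE_URL are expected in the environment (ssh-action's "envs").
IMAGE="rafaelgss/projects:blog-latest"
CONTAINER="blog_prod"

deploy() {
  docker pull "$IMAGE" || return 1
  # "rm -f" stops and removes the old container in one call; "|| true"
  # keeps the first deploy (no container yet) from failing.
  docker rm -f "$CONTAINER" >/dev/null 2>&1 || true
  docker run -d -p 4000:4000 --name "$CONTAINER" \
    -e VIRTUAL_HOST="$VIRTUAL_HOST" \
    -e SECRET_KEY_BASE="$SECRET_KEY_BASE" \
    -e DATABASE_URL="$DATABASE_URL" \
    -t "$IMAGE"
}
```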

That's it! Our actions.yml looks like this:

    on:
      push:
        branches:
          - master

    jobs:
      build:
        name: Build, push
        runs-on: ubuntu-latest
        steps:
          - name: Checkout master
            uses: actions/checkout@master
          - name: Build container image
            run: docker build -t rafaelgss/projects:blog-latest -f prod.dockerfile .
          - name: Docker Login
            env:
              DOCKER_USERNAME: ${{ secrets.DOCKER_USERNAME }}
              DOCKER_PASSWORD: ${{ secrets.DOCKER_PASSWORD }}
            run: docker login -u $DOCKER_USERNAME -p $DOCKER_PASSWORD
          - name: Push image to Docker Hub
            run: docker push rafaelgss/projects:blog-latest

      deploy:
        needs: build
        name: Deploy
        runs-on: ubuntu-latest
        steps:
          - name: executing remote ssh commands using key
            uses: appleboy/ssh-action@master
            env:
              VIRTUAL_HOST: 'blog.rafaelgss.com.br'
              SECRET_KEY_BASE: ${{ secrets.SECRET_KEY_BASE }}
              DATABASE_URL: ${{ secrets.DATABASE_URL }}
            with:
              host: ${{ secrets.HOST }}
              username: ${{ secrets.USERNAME }}
              key: ${{ secrets.key }}
              port: ${{ secrets.PORT }}
              envs: VIRTUAL_HOST,SECRET_KEY_BASE,DATABASE_URL
              script: |
                docker pull rafaelgss/projects:blog-latest
                docker kill blog_prod
                docker rm blog_prod
                docker run -d -p 4000:4000 --name blog_prod -e VIRTUAL_HOST="$VIRTUAL_HOST" -e SECRET_KEY_BASE="$SECRET_KEY_BASE" -e DATABASE_URL="$DATABASE_URL" -t rafaelgss/projects:blog-latest
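Since the old container is stopped before the new one starts, it helps to at least confirm the new container is answering before calling the deploy done. A minimal retry helper, assuming curl is installed on the droplet and that the blog's root path returns a 2xx status; wait_until is a hypothetical name.

```shell
#!/bin/sh
# Run a command up to N times, one second apart, until it succeeds.
# Returns 0 on the first success, 1 if every attempt fails.
wait_until() {
  attempts=$1
  shift
  i=1
  while ! "$@"; do
    if [ "$i" -ge "$attempts" ]; then
      return 1
    fi
    i=$((i + 1))
    sleep 1
  done
  return 0
}

# Usage on the droplet, right after "docker run" (assumes curl is present):
#   wait_until 30 curl -fsS -o /dev/null http://127.0.0.1:4000/
```

Placed as the last line of the ssh-action script, a non-zero exit from wait_until should surface as a failed Deploy step.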

Nginx - Docker

The Nginx configuration on the droplet, which proxies blog.rafaelgss.com.br to the container on port 4000:

    upstream phoenix_upstream {
        server 127.0.0.1:4000;
    }

    server {
        listen [::]:80;
        listen 80;
        server_name blog.rafaelgss.com.br;

        # Every request is proxied to the Phoenix container on port 4000.
        location / {
            proxy_pass http://phoenix_upstream;
            proxy_redirect off;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Host $server_name;
        }
    }
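After editing this file on the droplet, it's worth letting nginx validate it before reloading, so a typo doesn't take the site down. A minimal sketch, assuming it runs as root (or with the commands prefixed by sudo); reload_nginx is a hypothetical name.

```shell
#!/bin/sh
# Validate the configuration first and reload only if nginx accepts it;
# "nginx -s reload" signals the running master process to re-read config.
reload_nginx() {
  nginx -t && nginx -s reload
}
```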